Closed form solution of ridge regression explained | Ridge regression | Regularize linear regression - YouTube

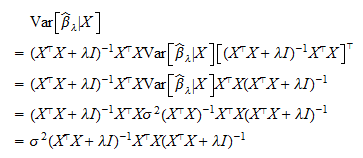

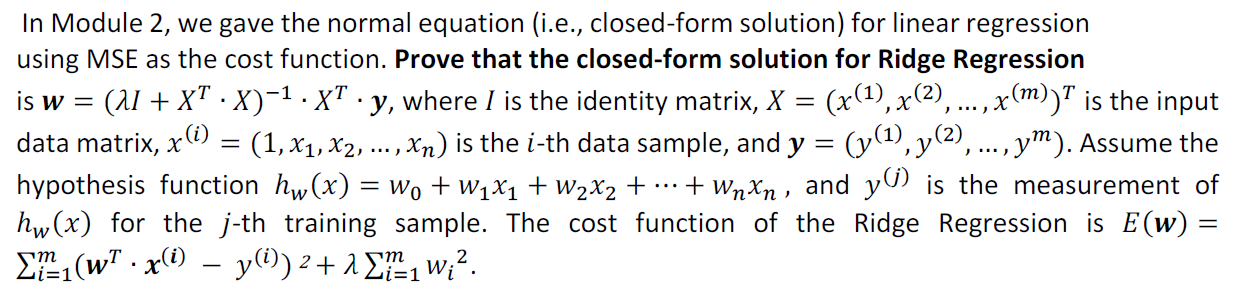

Linear Regression & Norm-based Regularization: From Closed-form Solutions to Non-linear Problems | by Andreas Maier | CodeX | Medium

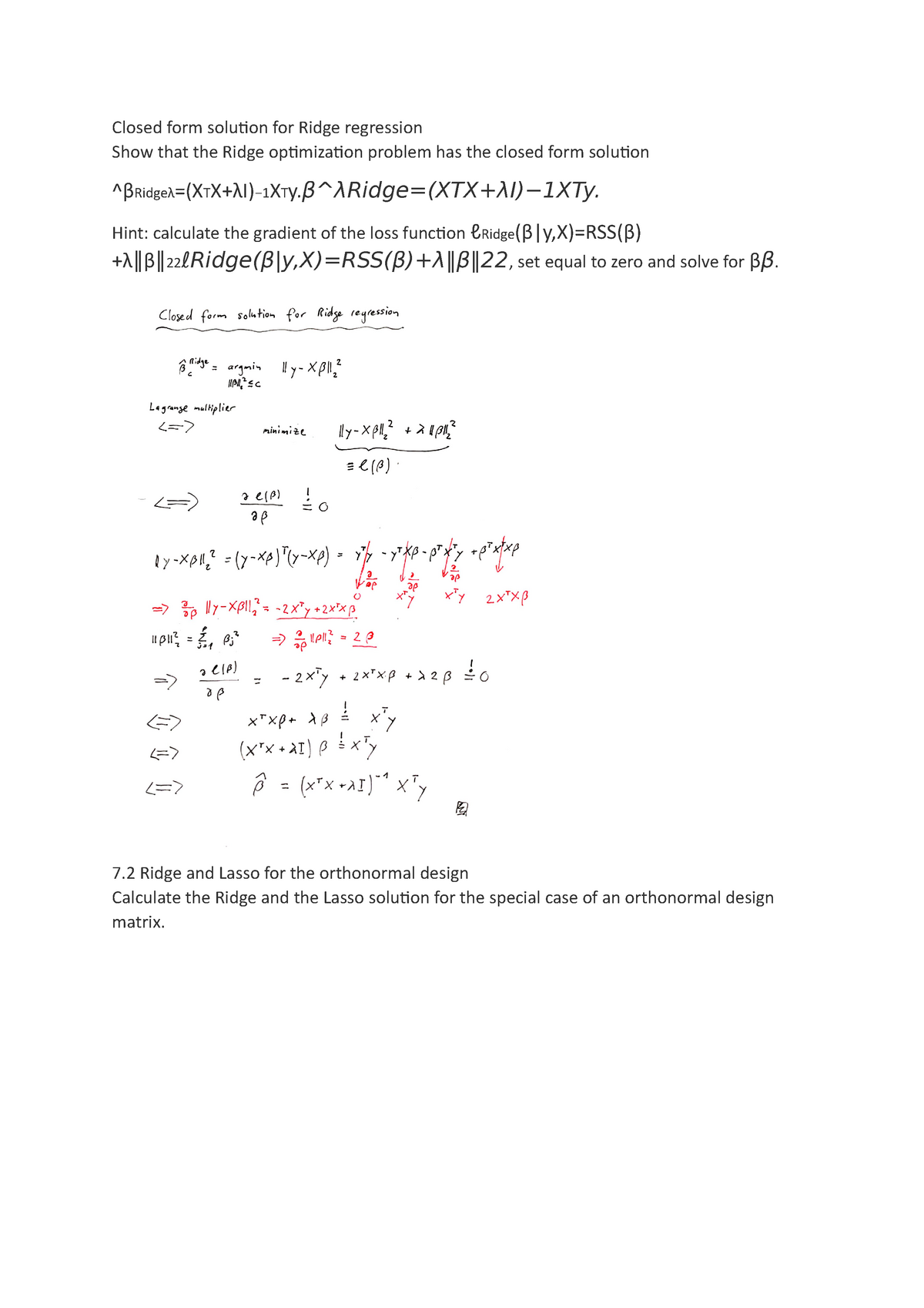

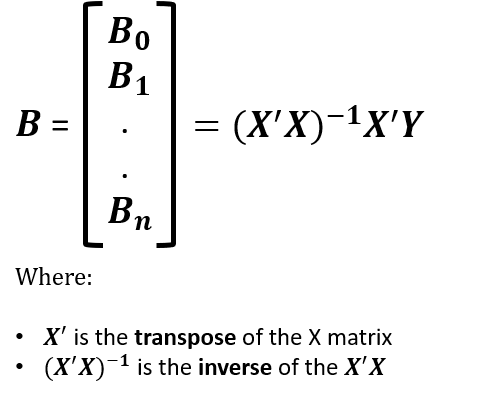

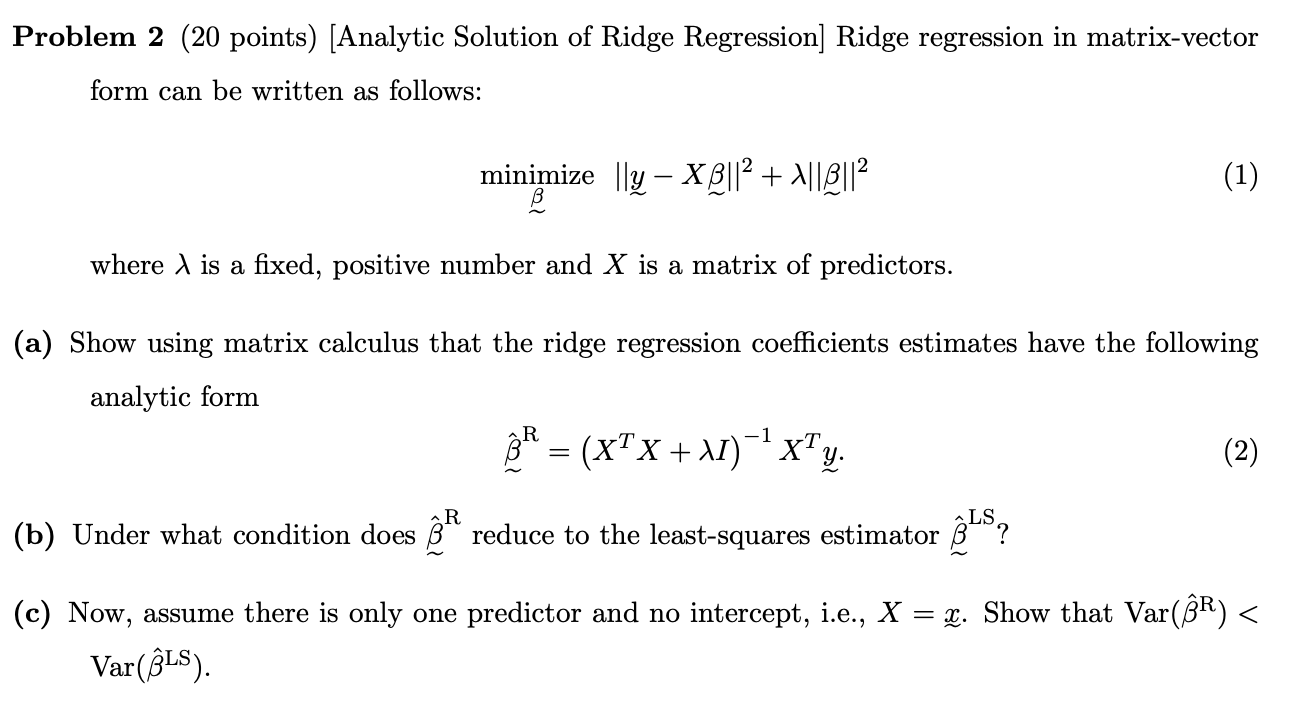

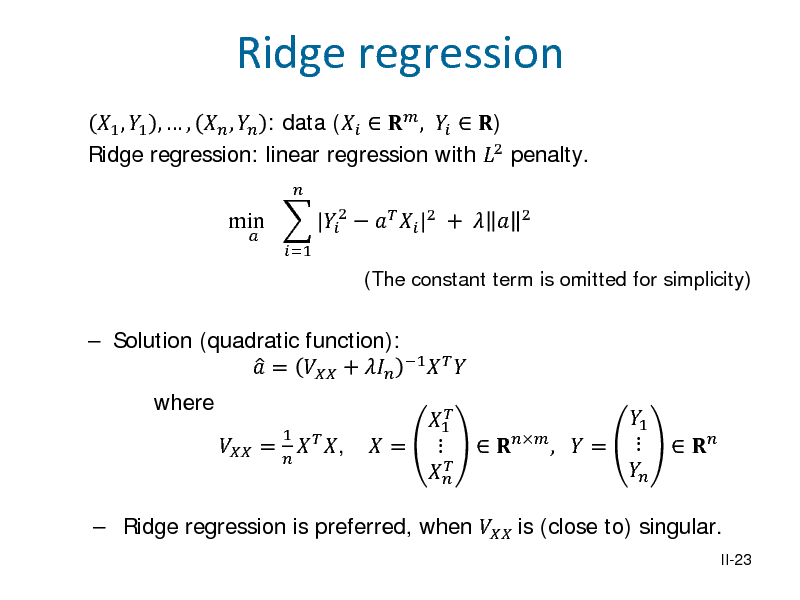

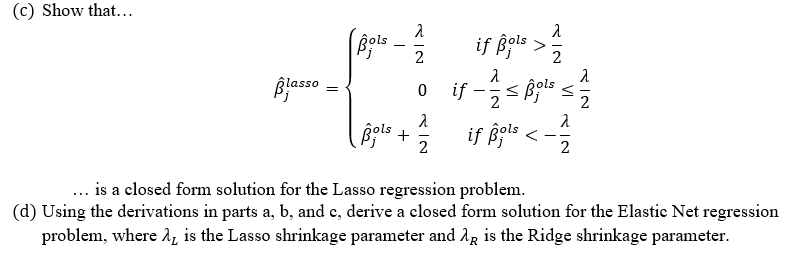

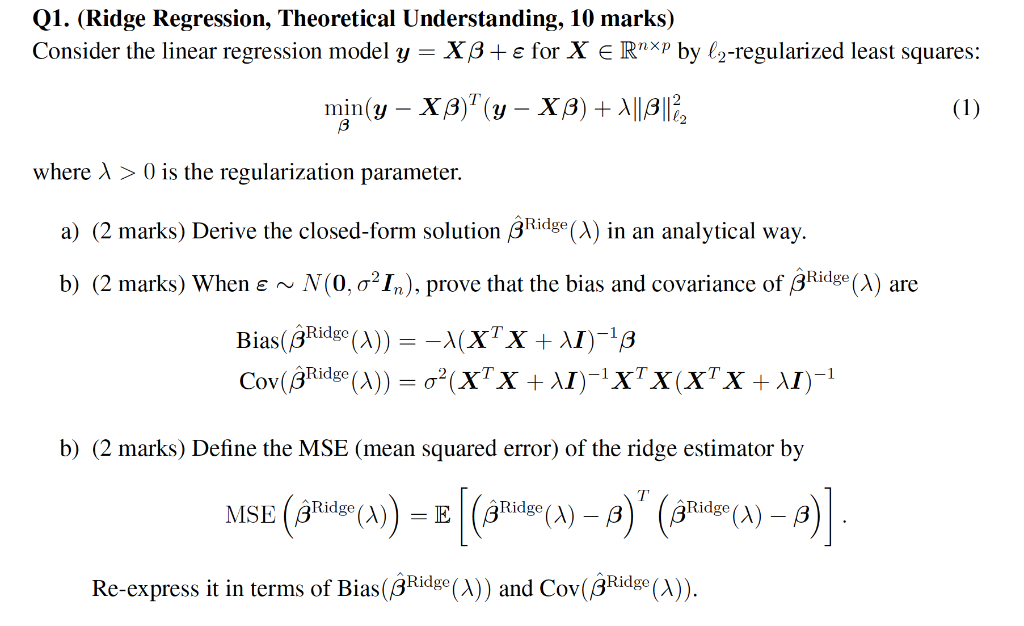

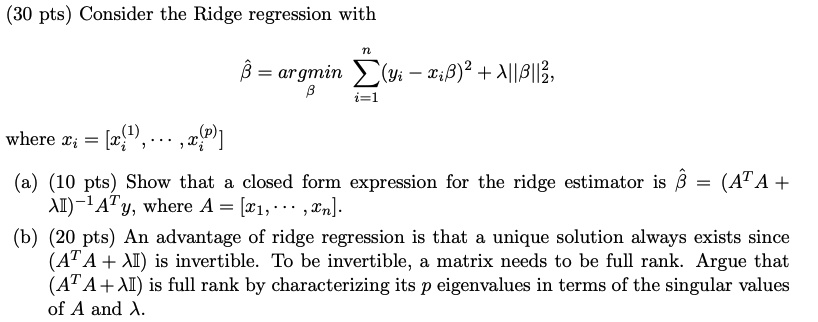

SOLVED: Consider the Ridge regression with argmin (Yi - βi)² + λ∑(βi)², where i ∈ 1,2,...,n. (a) Show that the closed form expression for the ridge estimator is β̂ = (XᵀX +

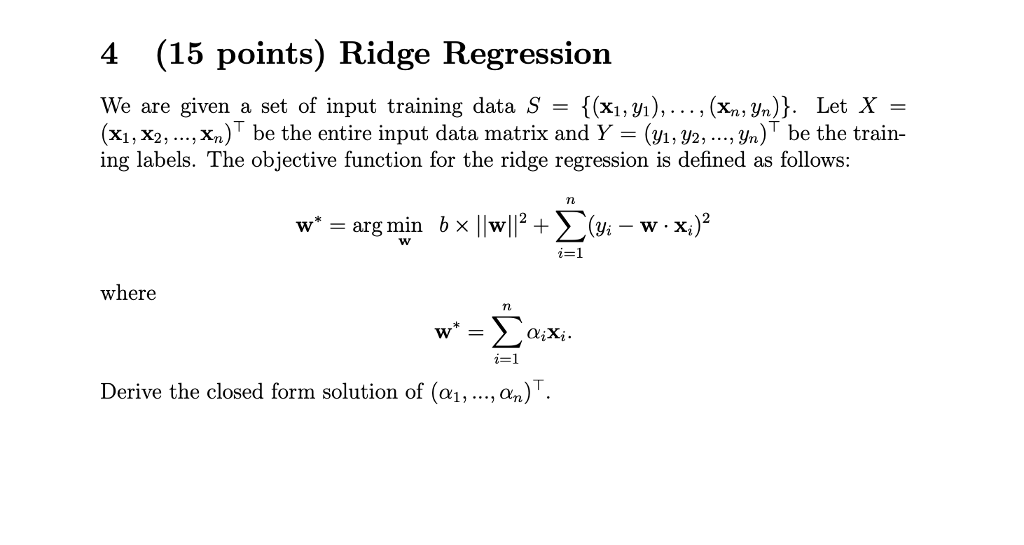

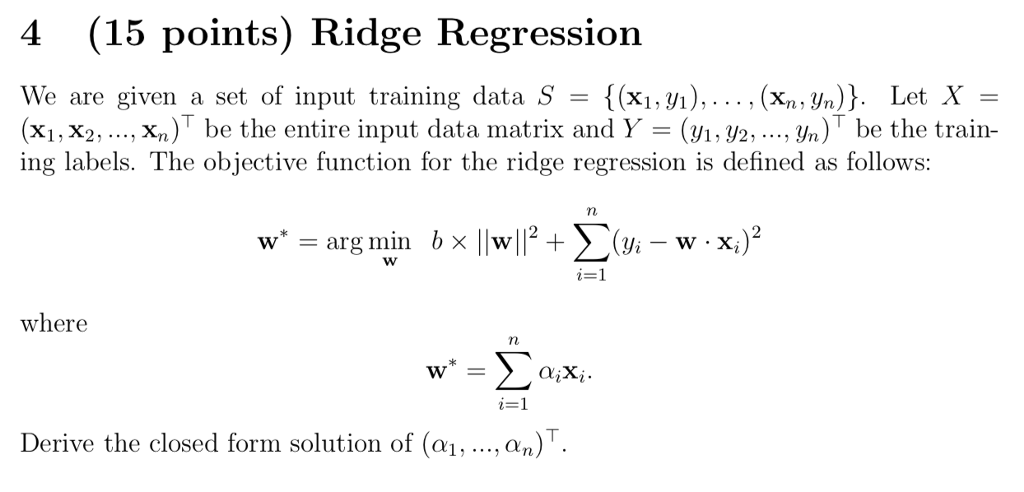

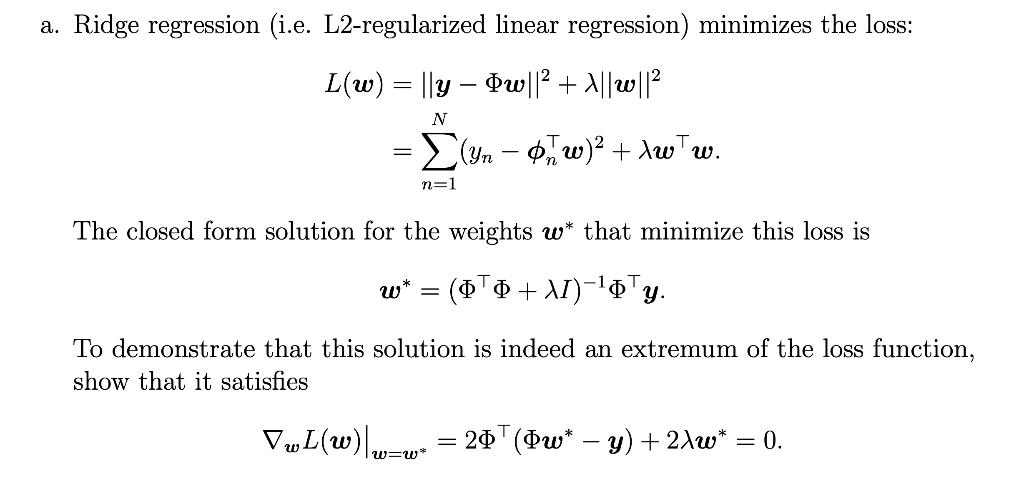

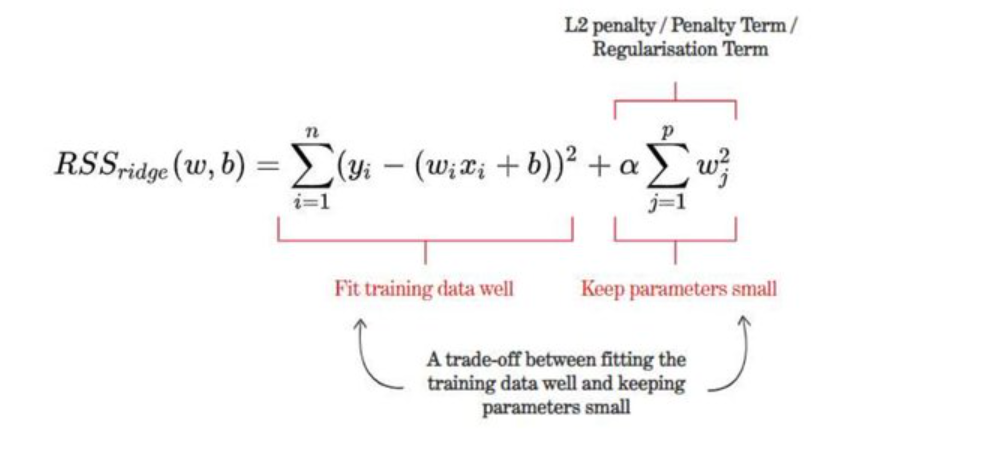

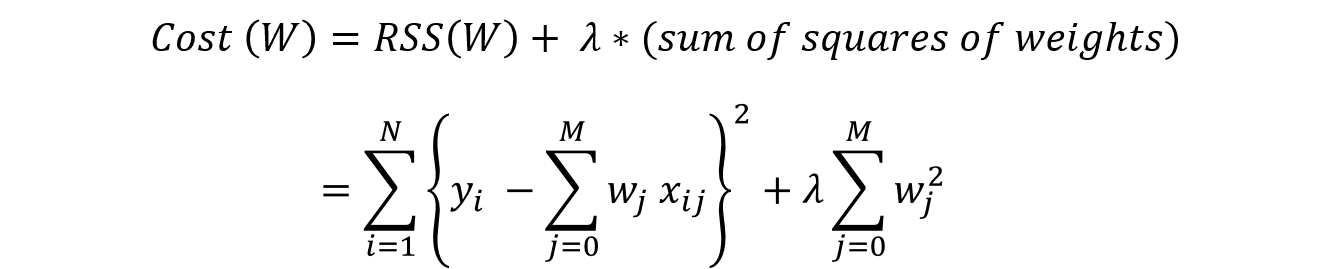

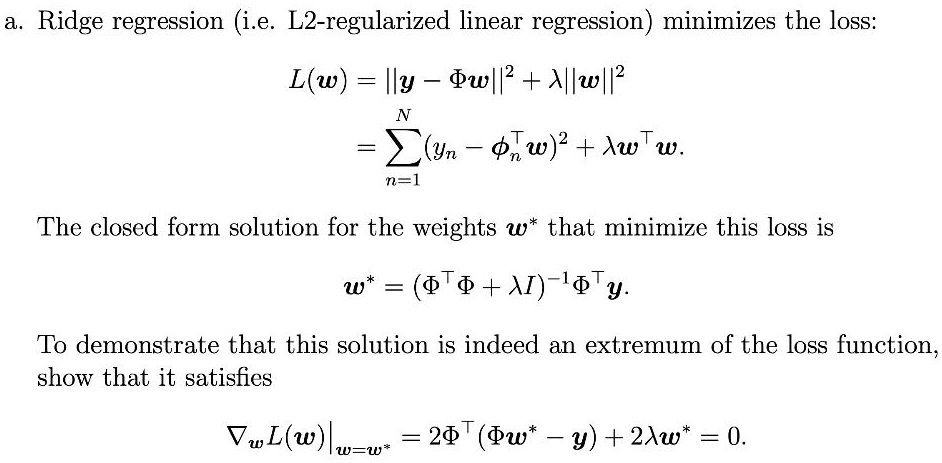

SOLVED: Ridge regression (i.e. L2-regularized linear regression) minimizes the loss: L(w) = ||y - Xw||^2 + α||w||^2, where X is the matrix of input features, y is the vector of target values,

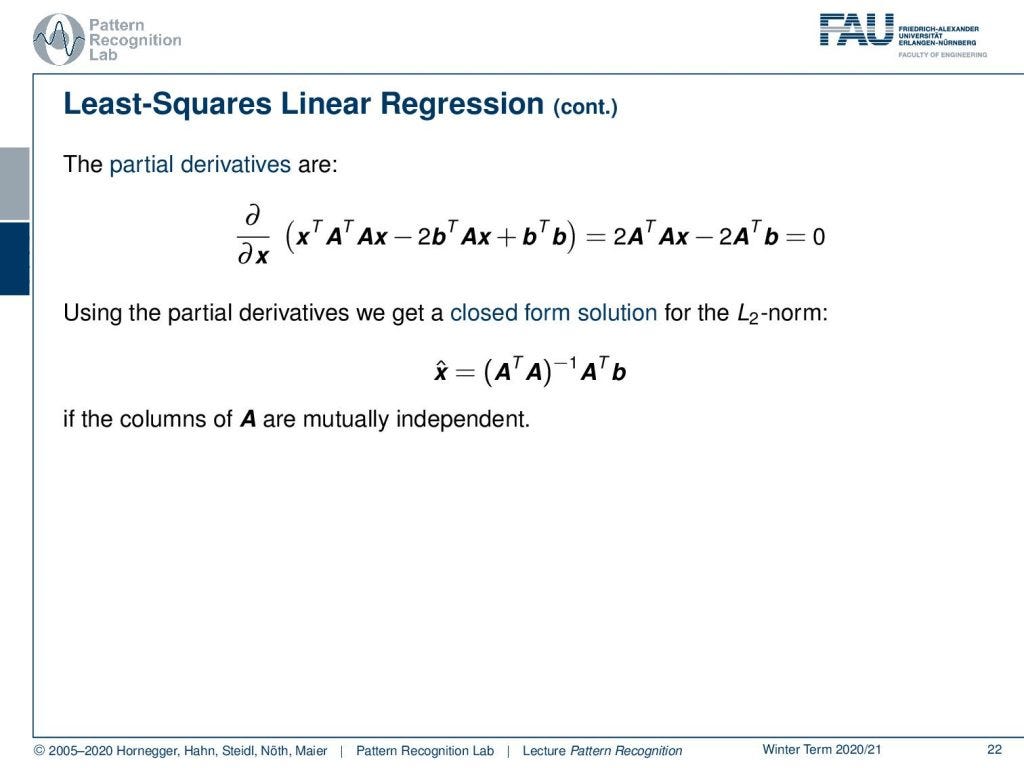

regression - Derivation of the closed-form solution to minimizing the least-squares cost function - Cross Validated